In automotive applications, the goal of vertical dynamics design is to tune the vehicle chassis suspension so that objectives such as ride comfort and vehicle stability are fulfilled. The main objective is to maximize ride comfort for the passengers and road holding of the tires at the same time. Novel control approaches are needed to achieve a higher degree of automation in the controller design process, and they should account for influencing factors such as variable loads, different road surfaces, tire types, tire pressures, and weather conditions. To this end, the suspension manufacturer KW automotive GmbH and DLR’s Vehicle System Dynamics department teamed up in the KIFAHR project. The project demonstrated the potential of intelligent learning methods, particularly reinforcement learning (RL), for controlling semi-active dampers in the chassis. RL methods are a promising approach to learning the control law through automated processes. Several publications have demonstrated the power of RL in control engineering, mostly for active control variables. The use of RL methods for semi-active vertical dynamics control systems, however, is a new field of research.

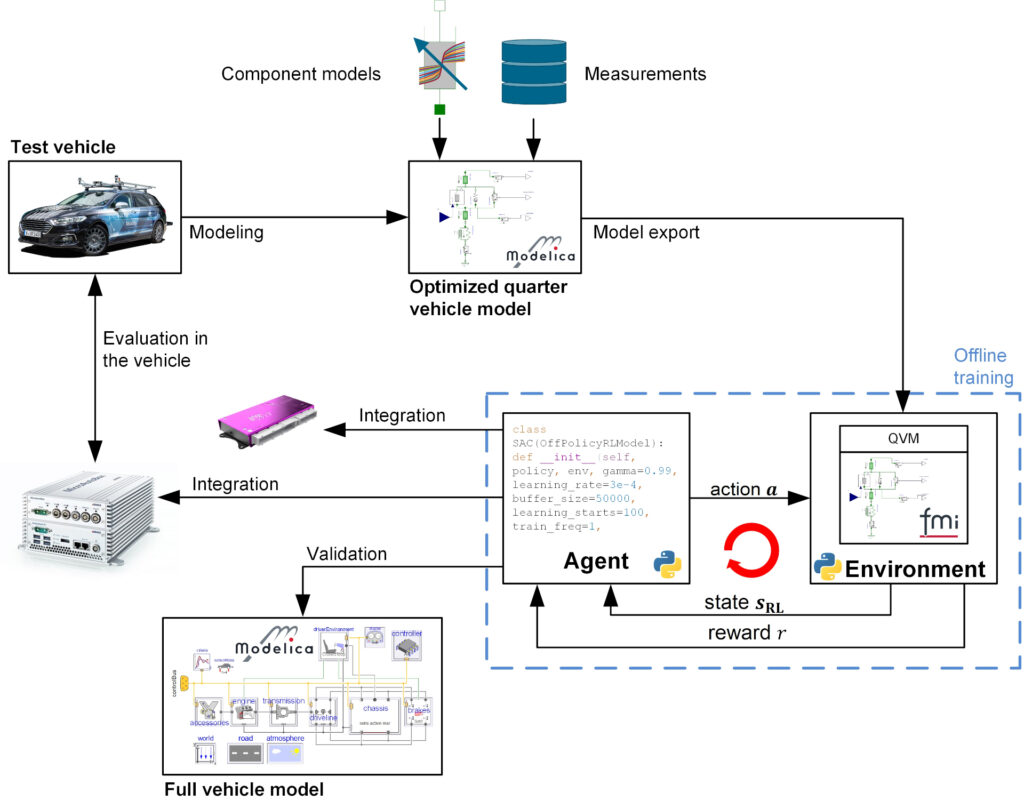

The project covered the entire RL toolchain process depicted in the figure above. The test vehicle was the DLR department’s AI For Mobility (AFM). The project started with extensive measurements of the whole vehicle on the KW automotive seven-post test rig and of individual components, such as bushings and bump stops, on various high-fidelity test rigs. After this first stage, the measurement data and in-depth system knowledge were used to generate control plant training models of the vehicle’s vertical dynamics for RL training. DLR SR’s own optimization tools and libraries heavily supported this process. The optimization fitted not only the parameters of the training models but also their structure. In addition, vehicle models of varying complexity and simulation duration were created depending on the intended use. Both data-based methods using neural networks and physically motivated methods were used to identify the damper dynamics.
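To make the idea of fitting a control plant model to measurement data more concrete, the following is a minimal sketch only, not the project's tooling. It assumes a linear quarter-car model with a constant damping coefficient, uses illustrative parameter values rather than AFM data, and replaces the test rig measurement with a synthetic trace generated by the same model. The names `QuarterCar`, `simulate_step_response`, and `fit_damping` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class QuarterCar:
    """Illustrative quarter-car parameters (assumed values, not AFM data)."""
    m_s: float = 400.0      # sprung (body) mass [kg]
    m_u: float = 40.0       # unsprung (wheel) mass [kg]
    k_s: float = 25_000.0   # suspension spring stiffness [N/m]
    k_t: float = 200_000.0  # tire vertical stiffness [N/m]


def simulate_step_response(p, c, z_r=0.02, dt=1e-3, t_end=5.0):
    """Body displacement trace for a road step of height z_r [m] with damping c [Ns/m]."""
    z_s = v_s = z_u = v_u = 0.0
    trace = []
    for _ in range(int(round(t_end / dt))):
        f_susp = p.k_s * (z_u - z_s) + c * (v_u - v_s)  # suspension force on the body
        f_tire = p.k_t * (z_r - z_u)                    # tire force on the wheel
        # Semi-implicit Euler: update velocities first, then positions.
        v_s += f_susp / p.m_s * dt
        v_u += (f_tire - f_susp) / p.m_u * dt
        z_s += v_s * dt
        z_u += v_u * dt
        trace.append(z_s)
    return trace


def fit_damping(p, reference, candidates):
    """Pick the candidate damping that minimizes the squared error to a reference trace."""
    def cost(c):
        sim = simulate_step_response(p, c)
        return sum((a - b) ** 2 for a, b in zip(sim, reference))
    return min(candidates, key=cost)


params = QuarterCar()
# Synthetic stand-in for a measurement: generated by the same model with c = 1500 Ns/m.
reference = simulate_step_response(params, 1500.0)
c_fit = fit_damping(params, reference, [500.0, 1000.0, 1500.0, 2000.0, 2500.0])
```

A grid search over a single parameter is used here purely for readability. The project describes fitting many parameters and the model structure with dedicated optimization tools, and identifying nonlinear damper dynamics, none of which this sketch attempts.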

For controller training, neural network export, and controller validation, new software toolchains were developed, or existing toolchains were comprehensively extended and adapted to the application. The training model was validated and integrated into the newly adapted RL toolchain. The toolchain can automatically train RL algorithms on different environments with different training scenarios and compare the resulting agents on specified evaluation metrics and scenarios. After several iterations of optimizing the training process, the best agents were selected for validation on a full-vehicle model. To assess the performance of the learned controller, the agent was exported as C code and integrated into a rapid control prototyping system. In a final step, the trained controller was evaluated on the seven-post test rig and compared to a state-of-the-art combined Skyhook & Groundhook controller. For a fair comparison, the parameters of the Skyhook & Groundhook controller were also obtained by numerical optimization on the control plant training models. In summary, a new and powerful AI-based controller for vertical dynamics control was developed within the project and implemented on an in-vehicle microcontroller via a code generation toolbox for neural networks. To guarantee safe execution of the controller, a safeguarding concept was developed and tested in real driving tests.
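For readers unfamiliar with the Skyhook & Groundhook baseline mentioned above, a common textbook form of the combined law for a semi-active damper can be sketched as follows. This is an illustrative formulation under stated assumptions, not the controller used in the project: the function name, the gains `c_sky` and `c_gnd`, and the damping limits `c_min` and `c_max` are hypothetical, and the damper is idealized as a variable linear damping coefficient.

```python
def skyhook_groundhook_damping(v_body, v_wheel, c_sky, c_gnd,
                               c_min=300.0, c_max=4000.0, eps=1e-6):
    """Return a damping coefficient [Ns/m] for a semi-active damper.

    v_body, v_wheel: vertical velocities of body and wheel [m/s], positive upward.
    c_sky, c_gnd:    skyhook and groundhook gains [Ns/m] (tuning parameters).
    c_min, c_max:    assumed physical damping limits of the damper.
    """
    v_rel = v_body - v_wheel
    if abs(v_rel) < eps:
        # No relative motion: the damper cannot generate force, use the soft setting.
        return c_min
    # Desired force on the body: the skyhook term opposes body motion, and the
    # groundhook term is the reaction of a force that opposes wheel motion.
    f_des = -c_sky * v_body + c_gnd * v_wheel
    # A passive damper produces f = -c * v_rel on the body, so solve for c.
    c_req = -f_des / v_rel
    # Semi-active constraint: only dissipative forces within the damper range.
    return min(max(c_req, c_min), c_max)


# Body moving up while the damper extends: the desired force is achievable.
c_ok = skyhook_groundhook_damping(0.5, 0.0, c_sky=2000.0, c_gnd=0.0)
# Body moving up while the damper compresses: the desired force would require
# adding energy, so the law falls back to minimum damping.
c_soft = skyhook_groundhook_damping(0.5, 1.0, c_sky=2000.0, c_gnd=0.0)
```

In this picture, the two gains are the kind of parameters that a numerical optimization on a plant model would tune, whereas an RL agent would replace the fixed mapping from measured states to damping with a learned one.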

The performance of the RL-based controller, learned on a validated control plant model, was confirmed both qualitatively in real driving tests and quantitatively by measurements on the seven-post vertical dynamics test rig. The results show that reinforcement learning methods for vertical dynamics control can outperform a state-of-the-art controller, qualitatively and quantitatively, across a wide range of driving maneuvers, and they underline the potential of RL methods for vertical dynamics control applications. During the project, powerful new software tools and capable hardware were developed that support the RL design process and ensure safe operation. Further research will address the refinement of the whole process with technologies such as physics-informed learning.

Literature: Project report, Paper “Reinforcement Learning for Semi-Active Vertical Dynamics Control with Real-World Tests”

This work was funded by the Federal Ministry of Education and Research (Germany), grant number 01IS20010A, in the project „KIFAHR: KI-basierte Fahrwerksregelung“.